AI tools for data structure

Related Tools:

MetaGPT

MetaGPT is an advanced AI tool that leverages the power of natural language processing to generate human-like text. It uses the latest deep learning models to understand and produce text in various styles and tones. With MetaGPT, users can easily create content for articles, essays, emails, and more with just a few clicks. The tool is designed to assist writers, marketers, students, and anyone looking to enhance their writing productivity and creativity.

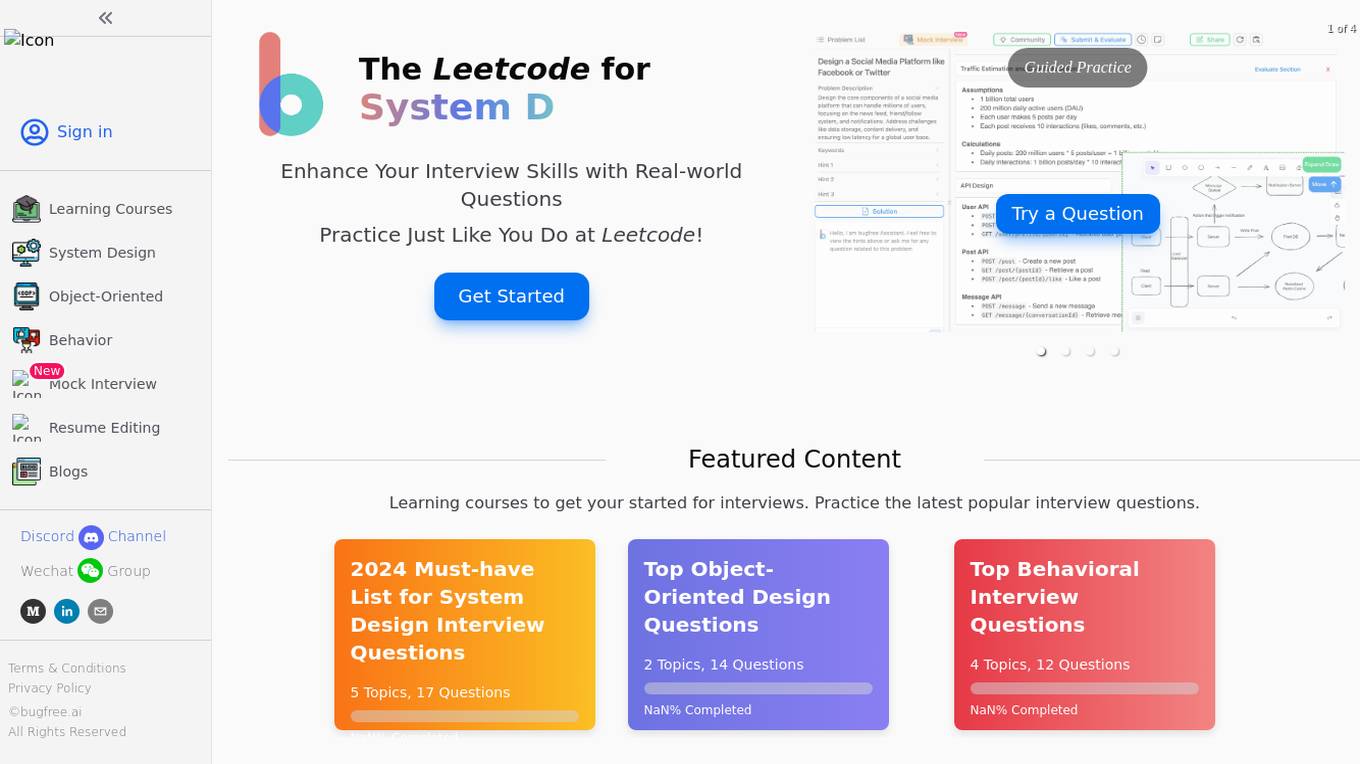

BugFree.ai

BugFree.ai is an AI-powered platform designed to help users practice system design and behavioral interviews, similar to LeetCode. The platform offers a range of features to assist users in preparing for technical interviews, including mock interviews, real-time feedback, and personalized study plans. With BugFree.ai, users can improve their problem-solving skills and gain confidence in tackling complex interview questions.

Snaplet

Snaplet is a data management tool for developers that provides AI-generated dummy data for local development, end-to-end testing, and debugging. It uses a real programming language (TypeScript) to define and edit data, ensuring type safety and auto-completion. Snaplet understands database structures and relationships, automatically transforming personally identifiable information and seeding data accordingly. It integrates seamlessly into development workflows, providing data where it's needed most: on local machines, for CI/CD testing, and preview environments.
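The combination described here (seeded dummy data, intact relationships, transformed personal data) can be sketched in a few lines. This is not Snaplet's API, which is TypeScript-based; it is a standard-library Python illustration, and `mask_email`, `seed`, and the `users`/`orders` tables are hypothetical names chosen for the example.

```python
import hashlib
import random

def mask_email(email: str) -> str:
    """Replace an email with a stable pseudonym so the real address is never exposed."""
    digest = hashlib.sha256(email.encode("utf-8")).hexdigest()[:10]
    return f"user_{digest}@example.com"

def seed(num_users: int, orders_per_user: int, rng_seed: int = 42):
    """Generate users and orders; every order's user_id references an existing user."""
    rng = random.Random(rng_seed)  # fixed seed: the same data on every run
    users = [
        {"id": i, "email": mask_email(f"person{i}@corp.test")}
        for i in range(1, num_users + 1)
    ]
    orders = []
    for user in users:
        for _ in range(orders_per_user):
            orders.append({
                "id": len(orders) + 1,
                "user_id": user["id"],  # foreign key stays valid
                "amount_cents": rng.randint(100, 10_000),
            })
    return users, orders

users, orders = seed(num_users=3, orders_per_user=2)
print(users[0])
print(orders[0])
```

Because the random generator is seeded, local machines, CI jobs, and preview environments would all see identical rows, which keeps test failures reproducible.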

Envistudios

Envistudios offers AI-powered solutions for business excellence through their innovative SaaS products 'Documente' and 'Infomente'. These platforms leverage artificial intelligence, natural language processing, and machine learning to provide intelligent document processing and generative business intelligence. Envistudios aims to empower businesses by unlocking insights from data, facilitating data-driven decision-making, and optimizing workflows.

AI Placeholder

AI Placeholder is a free AI-Powered Fake or Dummy Data API for testing and prototyping. It leverages OpenAI's GPT-3.5-Turbo Model API to generate fake content. Users can directly use the hosted version or self-host it. The API allows users to generate any data they can think of, with the ability to specify rules for data retrieval. It supports various content types like tweets, posts, Instagram posts, and more. The application is designed to assist developers and testers in creating realistic but non-sensitive data for their projects.

FetchFox

FetchFox is an AI-powered web scraping tool that allows users to extract data from any website by providing a prompt in plain English. It runs as a Chrome Extension and can bypass anti-scraping measures on sites like LinkedIn and Facebook. FetchFox is designed to quickly gather data for tasks such as lead generation, research data assembly, and market segment analysis.

DMSLOG.Ai

DMSLOG.Ai is an AI tool designed for Smart Port terminal optimization, decongestion, and decarbonization. It offers solutions powered by AI, machine learning, and digital twins to transform container terminals into Smart Ports, with an emphasis on quick ROI. The tool is used globally on a daily basis, offering plug-and-play AI solutions for various terminal operations and carbon footprint monitoring.

Isomeric

Isomeric is an AI tool that uses artificial intelligence to semantically understand unstructured text and extract specific data. It helps transform messy text into machine-readable JSON, enabling tasks such as web scraping, browser extensions, and general information extraction. With Isomeric, users can scale their data gathering pipeline in seconds, making it a valuable tool for various industries like customer support, data platforms, and legal services.

Airparser

Airparser is an AI-powered email and document parser tool that revolutionizes data extraction by utilizing the GPT parser engine. It allows users to automate the extraction of structured data from various sources such as emails, PDFs, documents, and handwritten texts. With features like automatic extraction, export to multiple platforms, and support for multiple languages, Airparser simplifies data extraction processes for individuals and businesses. The tool ensures data security and offers seamless integration with other applications through APIs and webhooks.

PDFMerse

PDFMerse is an AI-powered data extraction tool that revolutionizes how users handle document data. It allows users to effortlessly extract information from PDFs with precision, saving time and enhancing workflow. With cutting-edge AI technology, PDFMerse automates data extraction, ensures data accuracy, and offers versatile output formats like CSV, JSON, and Excel. The tool is designed to dramatically reduce processing time and operational costs, enabling users to focus on higher-value tasks.

jsonAI

jsonAI is an AI tool that allows users to easily transform data into structured JSON format. Users can define their schema, add custom prompts, and receive AI-structured JSON responses. The tool enables users to create complex schemas with nested objects, control the response JSON on the fly, and test their JSON data in real-time. jsonAI offers a free trial plan, seamless integration with existing apps, and ensures data security by not storing user data on their servers.
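To make the idea of a user-defined schema with nested objects concrete, here is a minimal sketch using only the Python standard library. It does not reflect jsonAI's actual API; the `SCHEMA` layout and the `conforms` helper are hypothetical, and the `raw` string stands in for a model response.

```python
import json

# Hypothetical schema: each key maps to a Python type, to a nested dict
# (nested object), or to a one-element list (array of that item schema).
SCHEMA = {
    "name": str,
    "age": int,
    "address": {"city": str, "zip": str},
    "tags": [str],
}

def conforms(value, schema):
    """Return True if `value` matches `schema` recursively."""
    if isinstance(schema, dict):
        return (
            isinstance(value, dict)
            and value.keys() == schema.keys()
            and all(conforms(value[k], s) for k, s in schema.items())
        )
    if isinstance(schema, list):
        return isinstance(value, list) and all(conforms(v, schema[0]) for v in value)
    # bool is a subclass of int in Python, so reject it explicitly for int fields
    if schema is int and isinstance(value, bool):
        return False
    return isinstance(value, schema)

# Stand-in for a structured response returned by a model
raw = '{"name": "Ada", "age": 36, "address": {"city": "London", "zip": "N1"}, "tags": ["math"]}'
record = json.loads(raw)
print(conforms(record, SCHEMA))
```

A check like this is typically run on every response before the data reaches application code, so that a missing key or a wrongly typed field is caught early.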

Dataku.ai

Dataku.ai is an advanced data extraction and analysis tool powered by AI technology. It offers seamless extraction of valuable insights from documents and texts, transforming unstructured data into structured, actionable information. The tool provides tailored data extraction solutions for various needs, such as resume extraction for streamlined recruitment processes, review insights for decoding customer sentiments, and leveraging customer data to personalize experiences. With features like market trend analysis and financial document analysis, Dataku.ai empowers users to make strategic decisions based on accurate data. The tool ensures precision, efficiency, and scalability in data processing, offering different pricing plans to cater to different user needs.

Jsonify

Jsonify is an AI tool that automates the process of exploring and understanding websites to find, filter, and extract structured data at scale. It uses AI-powered agents to navigate web content, replacing traditional data scrapers and providing data insights with speed and precision. Jsonify integrates with leading data analysis and business intelligence suites, allowing users to visualize and gain insights into their data easily. The tool offers a no-code dashboard for creating workflows and easily iterating on data tasks. Jsonify is trusted by companies worldwide for its ability to adapt to page changes, learn as it runs, and provide technical and non-technical integrations.

Lettria

Lettria is a no-code AI platform for text that helps users turn unstructured text data into structured knowledge. It combines the best of Large Language Models (LLMs) and symbolic AI to overcome current limitations in knowledge extraction. Lettria offers a suite of APIs for text cleaning, text mining, text classification, and prompt engineering. It also provides a Knowledge Studio for building knowledge graphs and private GPT models. Lettria is trusted by large organizations such as AP-HP and Leroy Merlin to improve their data analysis and decision-making processes.

Concentric AI

Concentric AI is a Managed Data Security Posture Management tool that utilizes Semantic Intelligence to provide comprehensive data security solutions. The platform offers features such as autonomous data discovery, data risk identification, centralized remediation, easy deployment, and data security posture management. Concentric AI helps organizations protect sensitive data, prevent data loss, and ensure compliance with data security regulations. The tool is designed to simplify data governance and enhance data security across various data repositories, both in the cloud and on-premises.

Kiln

Kiln is an AI tool designed for fine-tuning LLMs, generating synthetic data, and facilitating collaboration on datasets. It offers intuitive desktop apps, zero-code fine-tuning for various models, interactive visual tools for data generation, Git-based version control for datasets, and the ability to generate various prompts from data. Kiln supports a wide range of models and providers, provides an open-source library and API, prioritizes privacy, and allows structured data tasks in JSON format. The tool is free to use and focuses on rapid AI prototyping and dataset collaboration.

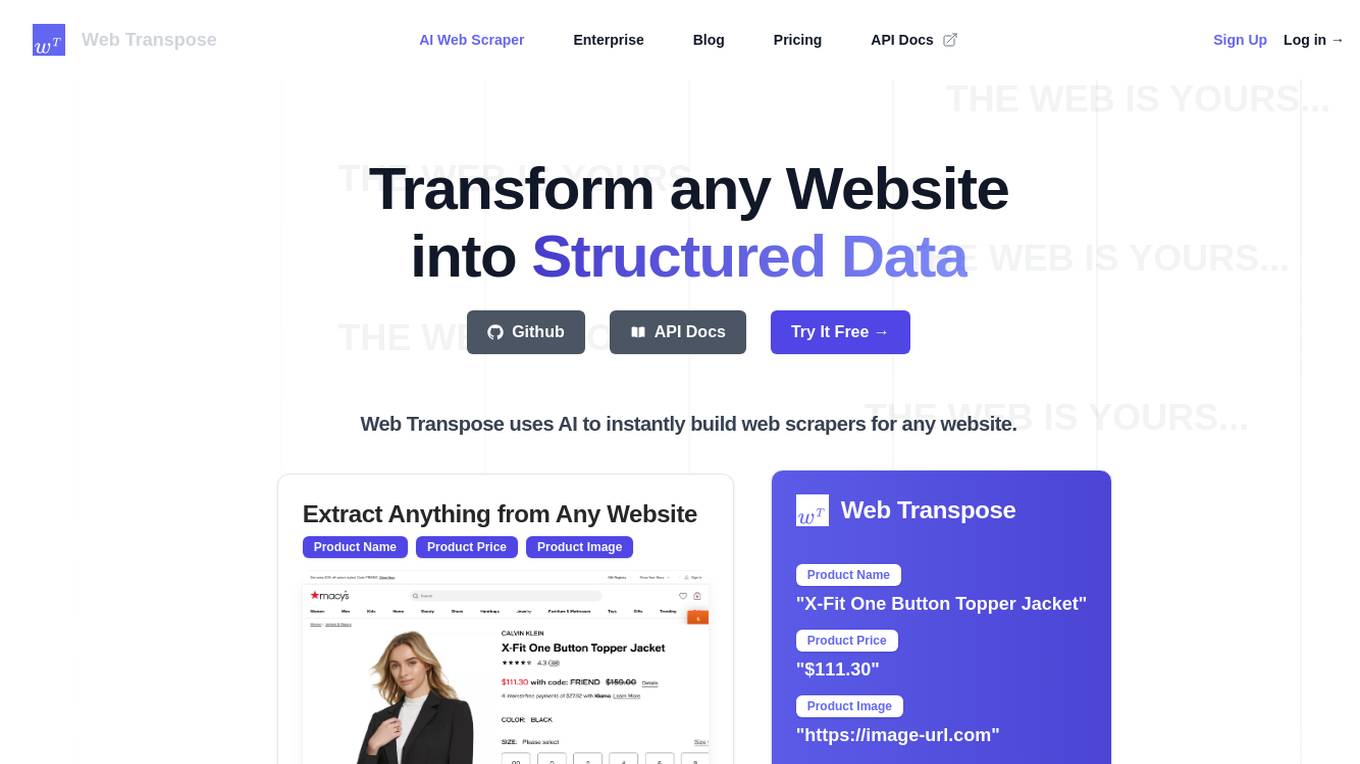

Web Transpose

Web Transpose is an AI-powered web scraping and web crawling API that allows users to transform any website into structured data. By utilizing artificial intelligence, Web Transpose can instantly build web scrapers for any website, enabling users to extract valuable information efficiently and accurately. The tool is designed for production use, offering low latency and effective proxy handling. Web Transpose learns the structure of the target website, reducing latency and preventing hallucinated output. Users can query any website like an API and build products quickly using the scraped data.

GetOData

GetOData is an AI-based data extraction tool designed for small-scale scraping. It allows users to discover and compare over 4,000 APIs for various use cases. The tool offers Apify Actors for extracting structured listings from any website and a Chrome Extension for seamless data extraction. With features like AI-based data extraction, side-by-side API comparisons, and automated scrolling for data collection, GetOData is a powerful tool for web scraping and data analysis.

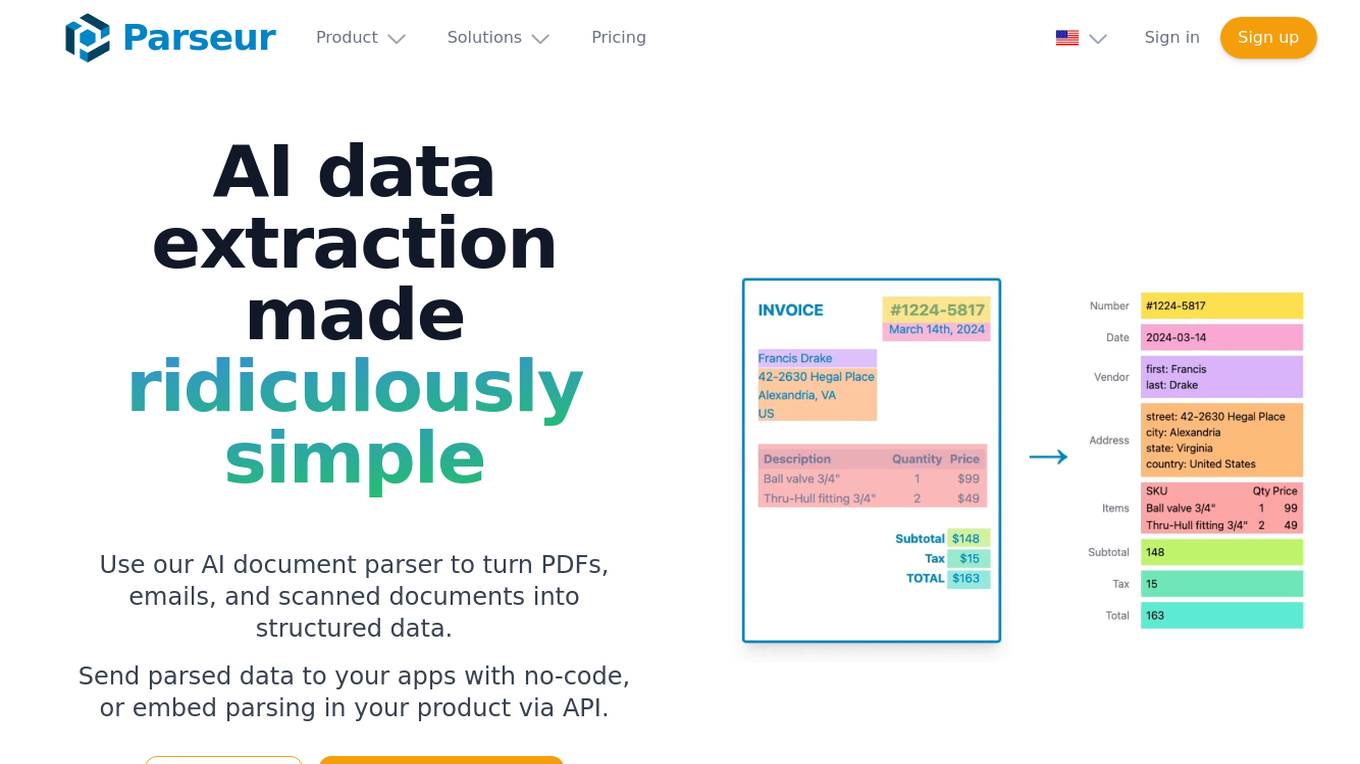

Parseur

Parseur is an AI data extraction software that uses artificial intelligence to extract structured data from various types of documents such as PDFs, emails, and scanned documents. It offers features like template-based data extraction, OCR software for character recognition, and dynamic OCR for extracting fields that move or change size. Parseur is trusted by businesses in finance, tech, logistics, healthcare, real estate, e-commerce, marketing, and human resources industries to automate data extraction processes, saving time and reducing manual errors.
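Template-based extraction, in its simplest form, maps field names to patterns and applies them to incoming text. The sketch below uses Python's `re` module to illustrate that idea; it is not Parseur's implementation, and `INVOICE_TEMPLATE`, `extract`, and the sample email are hypothetical.

```python
import re

# Hypothetical template: field name -> regular expression with one capture group.
INVOICE_TEMPLATE = {
    "invoice_number": r"Invoice\s*#\s*(\w+)",
    "total": r"Total:\s*\$?([\d.,]+)",
    "due_date": r"Due:\s*(\d{4}-\d{2}-\d{2})",
}

def extract(text, template):
    """Apply each field pattern to the text; fields that do not match map to None."""
    result = {}
    for field, pattern in template.items():
        match = re.search(pattern, text)
        result[field] = match.group(1) if match else None
    return result

email_body = """Hello,
Invoice # A1027 is attached.
Total: $1,250.00
Due: 2024-07-31
"""
fields = extract(email_body, INVOICE_TEMPLATE)
print(fields)
```

Fixed patterns like these break when a field moves or changes format, which is the gap that the OCR and AI-based features in the description are meant to cover.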

Functional Data Structures Tutor

Tutor on purely functional data structures and functional programming.
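For readers unfamiliar with the term, a purely functional (persistent) data structure is never modified in place: each operation returns a new version that shares structure with the old one. The following is a minimal Python sketch of a persistent stack; the names `Node`, `push`, `pop`, and `to_list` are chosen for illustration and do not come from the tool.

```python
from typing import NamedTuple, Optional

class Node(NamedTuple):
    """One immutable cell of a persistent singly linked stack."""
    head: int
    tail: Optional["Node"]

def push(stack: Optional[Node], value: int) -> Node:
    # Builds a new cell that points at the old stack; nothing is mutated.
    return Node(value, stack)

def pop(stack: Node) -> tuple[int, Optional[Node]]:
    # Returns the top value and the remaining stack, which is shared, not copied.
    return stack.head, stack.tail

def to_list(stack: Optional[Node]) -> list[int]:
    """Walk the cells from top to bottom and collect their values."""
    out = []
    while stack is not None:
        out.append(stack.head)
        stack = stack.tail
    return out

s1 = push(push(None, 1), 2)   # top to bottom: 2, 1
s2 = push(s1, 3)              # top to bottom: 3, 2, 1
top, rest = pop(s2)

print(to_list(s1))  # s1 is unchanged by the later push
print(to_list(s2))
print(rest is s1)   # the tail of s2 is the very same object as s1
```

Both `push` and `pop` run in constant time, and every earlier version of the stack remains valid after later operations.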

Algorithm GPT

Expert in algorithms and data structures, providing clear and concise explanations.

FAANG.AI

Get into FAANG. Practice with an AI expert in algorithms, data structures, and system design. Do a mock interview and improve.

Missing Cluster Identification Program

I analyze and integrate missing clusters in data for coherent structuring.

Idea To Code GPT

Generates a full & complete Python codebase, after clarifying questions, by following a structured section pattern.

SFM2 Algorithm Forge

Effective DS & Algorithms coach (type "help" to start). "May the Forge be with you! 🚀"

Ultimate-Data-Science-Toolkit---From-Python-Basics-to-GenerativeAI

Ultimate Data Science Toolkit is a comprehensive repository covering Python basics to Generative AI. It includes modules on Python programming, data analysis, statistics, machine learning, MLOps, case studies, and deep learning. The repository provides detailed tutorials on various topics such as Python data structures, control statements, functions, modules, object-oriented programming, exception handling, file handling, web API, databases, list comprehension, lambda functions, Pandas, Numpy, data visualization, statistical analysis, supervised and unsupervised machine learning algorithms, model serialization, ML pipeline orchestration, case studies, and deep learning concepts like neural networks and autoencoders.

ai-data-science-team

The AI Data Science Team of Copilots is an AI-powered data science team that uses agents to help users perform common data science tasks 10X faster. It includes agents specializing in data cleaning, preparation, feature engineering, modeling, and interpretation of business problems. The project is a work in progress with new data science agents to be released soon. Disclaimer: This project is for educational purposes only and not intended to replace a company's data science team. No warranties or guarantees are provided, and the creator assumes no liability for financial loss.

Data-Science-EBooks

This repository contains a collection of resources in the form of eBooks related to Data Science, Machine Learning, and similar topics.

instructor-php

Instructor for PHP is a library designed for structured data extraction in PHP, powered by Large Language Models (LLMs). It simplifies the process of extracting structured, validated data from unstructured text or chat sequences. Instructor enhances workflow by providing a response model, validation capabilities, and max retries for requests. It supports classes as response models and provides features like partial results, string input, extracting scalar and enum values, and specifying data models using PHP type hints or DocBlock comments. The library allows customization of validation and provides detailed event notifications during request processing. Instructor is compatible with PHP 8.2+ and leverages PHP reflection, Symfony components, and SaloonPHP for communication with LLM API providers.

EDA-GPT

EDA GPT is an open-source data analysis companion that offers a comprehensive solution for structured and unstructured data analysis. It streamlines the data analysis process, empowering users to explore, visualize, and gain insights from their data. EDA GPT supports analyzing structured data in various formats like CSV, XLSX, and SQLite, generating graphs, and conducting in-depth analysis of unstructured data such as PDFs and images. It provides a user-friendly interface, powerful features, and capabilities like comparing performance with other tools, analyzing large language models, multimodal search, data cleaning, and editing. The tool is optimized for maximal parallel processing, searching the internet and documents, and creating analysis reports from structured and unstructured data.

god-level-ai

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This is a drill for people who aim to be in the top 1% of Data and AI experts. The repository provides a routine for deep and shallow work sessions, covering topics from Python to AI/ML System Design and Personal Branding & Portfolio. It emphasizes the importance of continuous effort and action in the tech field.

instructor

Instructor is a tool that provides structured outputs from Large Language Models (LLMs) in a reliable manner. It simplifies the process of extracting structured data by utilizing Pydantic for validation, type safety, and IDE support. With Instructor, users can define models and easily obtain structured data without the need for complex JSON parsing, error handling, or retries. The tool supports automatic retries, streaming support, and extraction of nested objects, making it production-ready for various AI applications. Trusted by a large community of developers and companies, Instructor is used by teams at OpenAI, Google, Microsoft, AWS, and YC startups.
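The validate-and-retry loop behind tools of this kind can be sketched without any LLM dependency. The code below is not Instructor's API (which builds on Pydantic); it uses only the standard library, and `User`, `parse_user`, `extract_with_retries`, and `fake_model` are hypothetical names. `fake_model` is a stub that returns a malformed reply first and a valid one second.

```python
import json
from dataclasses import dataclass

@dataclass
class User:
    name: str
    age: int

def parse_user(raw: str) -> User:
    """Parse and validate one reply; raise ValueError on any problem."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"invalid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    name, age = data.get("name"), data.get("age")
    if not isinstance(name, str):
        raise ValueError("name must be a string")
    if not isinstance(age, int) or isinstance(age, bool):
        raise ValueError("age must be an integer")
    return User(name=name, age=age)

def extract_with_retries(call_model, prompt: str, max_retries: int = 2) -> User:
    """Call the model, validate the reply, and re-ask with the error on failure."""
    last_error = None
    for _ in range(max_retries + 1):
        full_prompt = prompt if last_error is None else f"{prompt}\nPrevious error: {last_error}"
        try:
            return parse_user(call_model(full_prompt))
        except ValueError as exc:
            last_error = exc
    raise ValueError(f"no valid reply after {max_retries + 1} attempts: {last_error}")

# Stub standing in for a real model call: first reply is invalid, second is valid.
replies = iter(['{"name": "Jason", "age": "twenty-five"}', '{"name": "Jason", "age": 25}'])
prompts_seen = []

def fake_model(prompt: str) -> str:
    prompts_seen.append(prompt)
    return next(replies)

user = extract_with_retries(fake_model, "Extract: Jason is 25 years old.")
print(user)
```

Feeding the validation error back into the next prompt gives the model a concrete hint about what to correct, rather than repeating the same request blindly.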

Fueling-Ambitions-Via-Book-Discoveries

Fueling-Ambitions-Via-Book-Discoveries is an Advanced Machine Learning & AI Course designed for students, professionals, and AI researchers. The course integrates rigorous theoretical foundations with practical coding exercises, ensuring learners develop a deep understanding of AI algorithms and their applications in finance, healthcare, robotics, NLP, cybersecurity, and more. Inspired by MIT, Stanford, and Harvard’s AI programs, it combines academic research rigor with industry-standard practices used by AI engineers at companies like Google, OpenAI, Facebook AI, DeepMind, and Tesla. Learners study 50+ AI techniques drawn from top Machine Learning & Deep Learning books, write code from scratch using real-world datasets, projects, and case studies, and focus on ML Engineering & AI Deployment using Django & Streamlit. The course also offers industry-relevant projects to build a strong AI portfolio.

awesome-llm-json

This repository is an awesome list dedicated to resources for using Large Language Models (LLMs) to generate JSON or other structured outputs. It includes terminology explanations, hosted and local models, Python libraries, blog articles, videos, Jupyter notebooks, and leaderboards related to LLMs and JSON generation. The repository covers various aspects such as function calling, JSON mode, guided generation, and tool usage with different providers and models.

ollama-r

The Ollama R library provides an easy way to integrate R with Ollama for running language models locally on your machine. It supports working with standard data structures for different LLMs, offers various output formats, and enables integration with other libraries/tools. The library uses the Ollama REST API and requires the Ollama app to be installed, with GPU support for accelerating LLM inference. It is inspired by Ollama Python and JavaScript libraries, making it familiar for users of those languages. The installation process involves downloading the Ollama app, installing the 'ollamar' package, and starting the local server. Example usage includes testing connection, downloading models, generating responses, and listing available models.

fenic

fenic is an opinionated DataFrame framework from typedef.ai for building AI and agentic applications. It transforms unstructured and structured data into insights using familiar DataFrame operations enhanced with semantic intelligence. It supports markdown, transcripts, and semantic operators, plus efficient batch inference across various model providers. fenic is purpose-built for LLM inference, providing a query engine designed for AI workloads, semantic operators as first-class citizens, native unstructured data support, production-ready infrastructure, and a familiar DataFrame API.

OneKE

OneKE is a flexible dockerized system for schema-guided knowledge extraction, capable of extracting information from the web and raw PDF books across multiple domains like science and news. It employs a collaborative multi-agent approach and includes a user-customizable knowledge base to enable tailored extraction. OneKE supports a range of IE tasks, data sources, LLMs, and extraction methods, along with knowledge base configuration. Users can start with examples using YAML, Python, or Web UI, and perform tasks like Named Entity Recognition, Relation Extraction, Event Extraction, Triple Extraction, and Open Domain IE. The tool supports different source formats like Plain Text, HTML, PDF, Word, TXT, and JSON files. Users can choose from various extraction models like OpenAI, DeepSeek, LLaMA, Qwen, ChatGLM, MiniCPM, and OneKE for information extraction tasks. Extraction methods include Schema Agent, Extraction Agent, and Reflection Agent. The tool also provides support for schema repository and case repository management, along with solutions for network issues. Contributors to the project include Ningyu Zhang, Haofen Wang, Yujie Luo, Xiangyuan Ru, Kangwei Liu, Lin Yuan, Mengshu Sun, Lei Liang, Zhiqiang Zhang, Jun Zhou, Lanning Wei, Da Zheng, and Huajun Chen.

Awesome_Mamba

Awesome Mamba is a curated collection of groundbreaking research papers and articles on Mamba Architecture, a pioneering framework in deep learning known for its selective state spaces and efficiency in processing complex data structures. The repository offers a comprehensive exploration of Mamba architecture through categorized research papers covering various domains like visual recognition, speech processing, remote sensing, video processing, activity recognition, image enhancement, medical imaging, reinforcement learning, natural language processing, 3D recognition, multi-modal understanding, time series analysis, graph neural networks, point cloud analysis, and tabular data handling.

God-Level-AI

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This repository is designed for individuals aiming to excel in the field of Data and AI, providing video sessions and text content for learning. It caters to those in leadership positions, professionals, and students, emphasizing the need for dedicated effort to achieve excellence in the tech field. The content covers various topics with a focus on practical application.

cosdata

Cosdata is a cutting-edge AI data platform designed to power the next generation search pipelines. It features immutability, version control, and excels in semantic search, structured knowledge graphs, hybrid search capabilities, real-time search at scale, and ML pipeline integration. The platform is customizable, scalable, efficient, enterprise-grade, easy to use, and can manage multi-modal data. It offers high performance, indexing, low latency, and high requests per second. Cosdata is designed to meet the demands of modern search applications, empowering businesses to harness the full potential of their data.

mcp-redis

The Redis MCP Server is a natural language interface designed for agentic applications to efficiently manage and search data in Redis. It integrates seamlessly with MCP (Model Context Protocol) clients, enabling AI-driven workflows to interact with structured and unstructured data in Redis. The server supports natural language queries, seamless MCP integration, full Redis support for various data types, search and filtering capabilities, scalability, and lightweight design. It provides tools for managing data stored in Redis, such as string, hash, list, set, sorted set, pub/sub, streams, JSON, query engine, and server management. Installation can be done from PyPI or GitHub, with options for testing, development, and Docker deployment. Configuration can be via command line arguments or environment variables. Integrations include OpenAI Agents SDK, Augment, Claude Desktop, and VS Code with GitHub Copilot. Use cases include AI assistants, chatbots, data search & analytics, and event processing. Contributions are welcome under the MIT License.